Rubber Duck Debugging with LLMs

Adolfo Benedetti - Apr 2, 2024

Introduction

Rubber duck debugging is the practice of explaining code to an inanimate object such as a rubber duck. Our team often uses this approach because verbalizing the problem or the code helps programmers gain insight into bugs and logic errors. Large language models (LLMs) can act as virtual rubber ducks for debugging, even when the model has not been trained on a brand-new technology. This blog post explores prompt engineering techniques to optimize LLMs for rubber duck debugging. I strongly recommend the free short course Prompt engineering for developers and the newer one, Prompt engineering with llama 2.

Crafting Rubber Duck Debugging Prompts

Effective rubber duck debugging prompts should:

Clearly explain the expected program behavior vs. actual behavior.

Walk through code snippets line-by-line.

Adopt a naive listener persona - imagine explaining to a friend.

Ask questions to elicit new perspectives.

Provide examples of possible bugs to prompt insights.
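The checklist above can be turned into a small prompt template. The following is only a sketch: the function name `build_rubber_duck_prompt`, its parameters, and the wording of each line are assumptions made for illustration, not a fixed recipe.

```python
def build_rubber_duck_prompt(expected, actual, code,
                             persona="a friend who has never seen this codebase",
                             suspected_bugs=()):
    """Assemble a rubber duck debugging prompt (hypothetical helper)."""
    lines = [
        # Naive listener persona
        f"Act as {persona}. I am going to explain my code to you.",
        # Expected vs. actual behavior
        f"Expected behavior: {expected}",
        f"Actual behavior: {actual}",
        # Line-by-line walkthrough
        "Please walk through the following code line by line:",
        code.strip(),
        # Questions that elicit new perspectives
        "Ask me questions about anything that looks unclear or inconsistent.",
    ]
    if suspected_bugs:
        # Examples of possible bugs to prompt insights
        lines.append("Possible causes I have considered so far:")
        lines.extend(f"- {bug}" for bug in suspected_bugs)
    return "\n".join(lines)


prompt = build_rubber_duck_prompt(
    expected="the function returns the average of the list",
    actual="it raises ZeroDivisionError for some inputs",
    code="def average(values):\n    return sum(values) / len(values)",
    suspected_bugs=["an empty list is passed in"],
)
print(prompt)
```

Writing out the expected and actual behavior for the template is often where the first insight shows up, before any model has answered.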

Role-Based Rubber Duck Personas

Different listener personas can be adopted based on roles and expertise levels. For example, you can use the following personas to guide the LLM's response:

New programmer: Explain code simply as if to a beginner.

Non-programmer: Explain logic and goals non-technically.

Debugging assistant: Adopt a helpful tool's perspective.

Example prompts:

"As a new programmer, can you help me understand what this code is doing?"

"Imagine you have no coding experience. Can you explain why my program crashes?"

"As a debugging assistant, could you walk through steps to fix this recursive Fibonacci function that crashes for large inputs?"

    def fibonacci(n):
        if n <= 1:
            return n
        else:
            return fibonacci(n-1) + fibonacci(n-2)

    print(fibonacci(100))
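For reference, here is the kind of fix a debugging assistant might propose for the snippet above. It is a minimal sketch rather than the output of any particular model, and the name `fibonacci_iterative` is hypothetical. The recursive version recomputes the same values an exponential number of times, so `fibonacci(100)` effectively never finishes; a loop does the same work in n steps.

```python
def fibonacci_iterative(n):
    """Return the n-th Fibonacci number, with fibonacci(0) == 0 and fibonacci(1) == 1."""
    if n < 0:
        # Assumption: negative inputs are treated as an error here.
        raise ValueError("n must be non-negative")
    previous, current = 0, 1
    for _ in range(n):
        # Slide the window one step along the sequence.
        previous, current = current, previous + current
    return previous


print(fibonacci_iterative(100))  # 354224848179261915075
```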

Applying Rubber Duck Debugging

LLMs can aid debugging across languages, for example:

Identifying logic errors in Python code.

Explaining runtime errors in Java snippets.

Tracing through C++ programs line-by-line.

The key is prompting the LLM to walk through the code methodically and illuminate gaps in understanding.

Iterating on Rubber Ducking Prompts

Like any hypothesis, prompts should be tested and refined:

Observe which personas elicit the most insights.

Try different wordings and examples.

Check consistency of responses.
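One rough way to check consistency is to send the same prompt several times, paste the answers into a list, and compare them. The sketch below assumes the responses were collected by hand; `response_consistency` is a hypothetical helper, and plain text similarity is only a crude proxy for whether two answers agree in substance.

```python
from difflib import SequenceMatcher
from itertools import combinations


def response_consistency(responses):
    """Mean pairwise text similarity between responses, from 0.0 to 1.0."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        # Zero or one response: nothing to disagree with.
        return 1.0
    scores = [SequenceMatcher(None, a, b).ratio() for a, b in pairs]
    return sum(scores) / len(scores)


# Hypothetical answers to the same prompt, collected manually.
answers = [
    "The recursion recomputes the same values many times.",
    "The function recomputes the same values repeatedly.",
    "The base case is wrong for negative numbers.",
]
print(f"consistency: {response_consistency(answers):.2f}")
```

A low score does not mean the prompt is bad; it is a signal to read the answers side by side and see whether the persona or wording is steering the model in different directions.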

Rubber Ducking to Help the LLM

Let's hallucinate together this time. Remember, the goal is not to get the correct response immediately, but to follow a process that leads us to it. Now let's use the approach the other way around: we paraphrase the problem, and while building the prompt we also unlock insights into the problem itself. If you need to protect your own or your client's intellectual property, here is the beauty of this approach: you don't necessarily need to upload any actual code! Let's try a hypothetical prompt built this way.

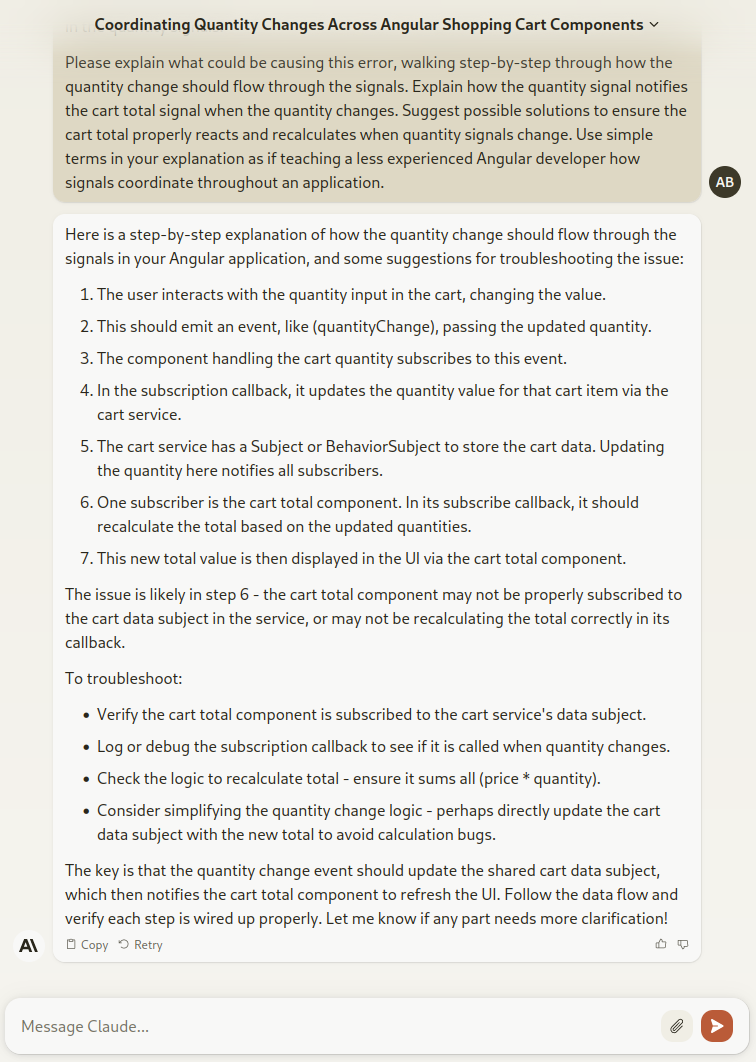

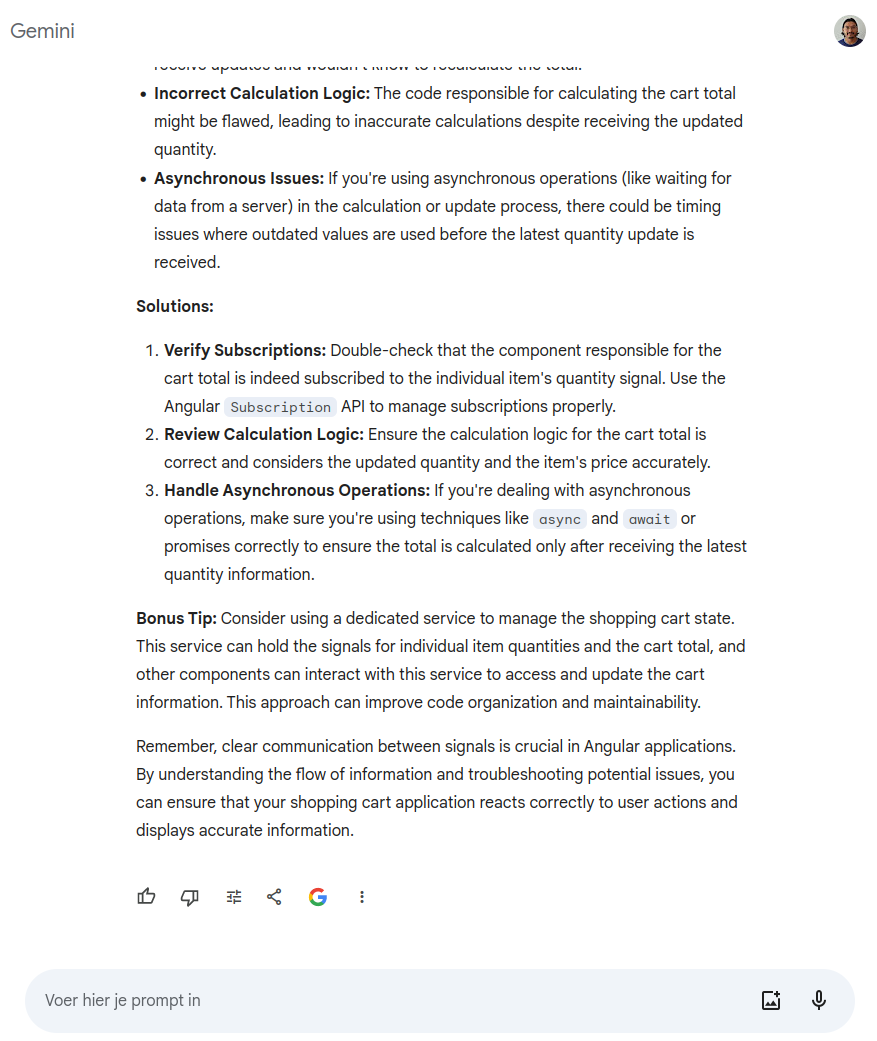

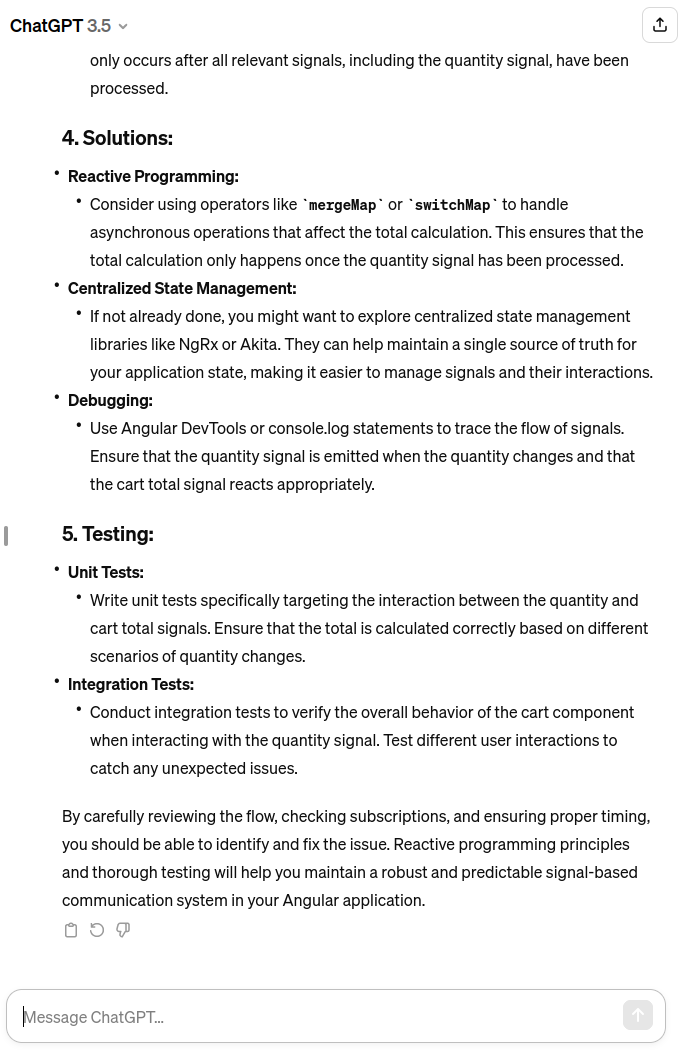

"Imagine you are a seasoned Angular developer who has been using signals extensively in your applications. You are currently working on an e-commerce application that allows users to add items to a shopping cart. The app uses Angular signals to track the state of the shopping cart, including the selected items and their prices and quantities. The app is running into an issue where when the user updates the quantity for an item in the cart, the total cart price does not always update correctly in the UI. It seems that the component responsible for displaying the cart total is not properly reacting to changes in the quantity signals. Please explain what could be causing this error, walking step-by-step through how the quantity change should flow through the signals. Explain how the quantity signal notifies the cart total signal when the quantity changes. Suggest possible solutions to ensure the cart total properly reacts and recalculates when quantity signals change. Use simple terms in your explanation as if teaching a less experienced Angular developer how signals coordinate throughout an application."

In summary, this prompt:

Frames the context as an expert Angular developer building an e-commerce app with Angular signals

Describes a specific bug related to quantities and cart totals

Asks for a traced, step-by-step explanation of the signal flow

Encourages clear explanations and teaching of signals concepts

Requests possible solutions to resolve the bug

Again, it does not matter here whether the LLMs were trained on the technology in question (Angular signals). We will just try to extract the relevant takeaways from the responses, which are of course open to interpretation.

Aria by Opera (embedded in the browser)

It approached the problem as if it were using RxJS Observables, which makes sense given the similarities.

Bing Copilot in Microsoft Edge (embedded in the browser and in the search engine)

Similar to Aria, it went full RxJS Observables.

Claude by Anthropic

I love its codeless approach, which explains the problem conceptually instead of going deep into hallucination.

Gemini by Google

Despite hallucinations in the diagnostics and the recent criticism of its woke results, the bonus suggestion to use a dedicated service to manage the shopping cart state is a very good architectural approach.

ChatGPT by OpenAI

It went into full hallucination with RxJS, but centralized state management is still a good suggestion.

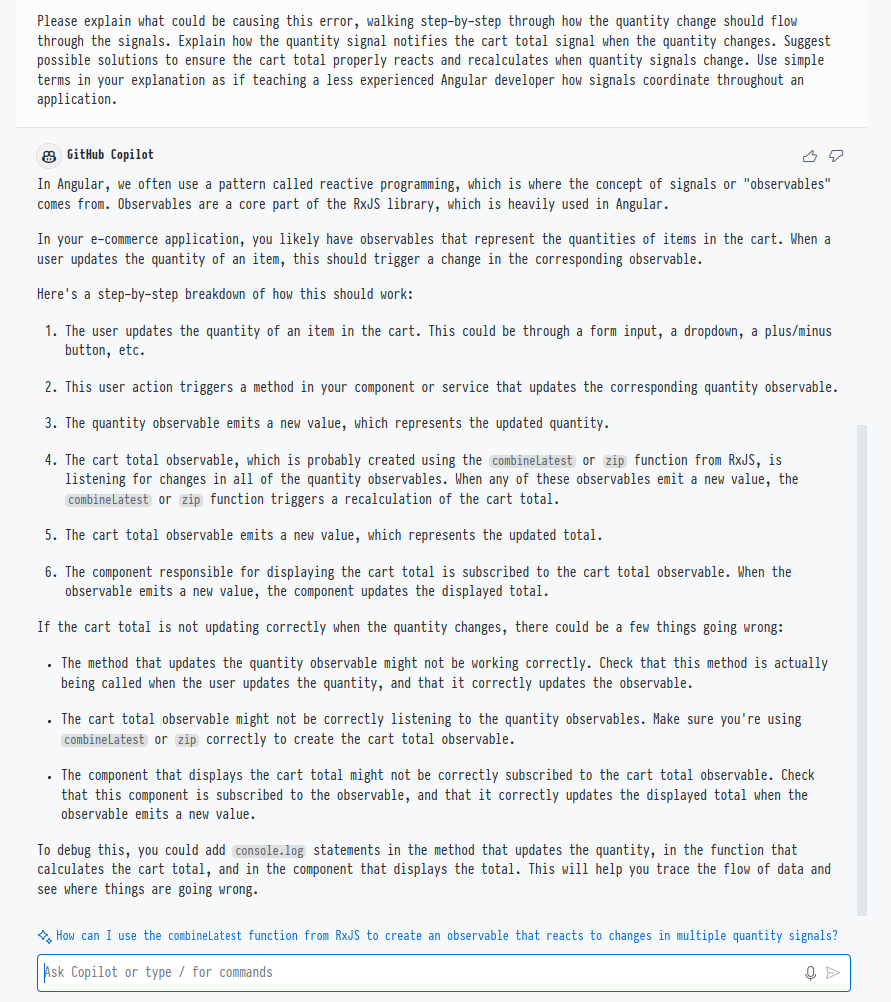

Copilot by GitHub (embedded in the code editor)

Probably the best of all, given that its problem breakdown gives us a better understanding of the problem to solve.

Conclusions

As solution seekers with suitable prompts for LLMs, we can use these AI assistants as virtual rubber ducks to clarify problems.

Prompt engineering techniques continue to improve, unlocking new use cases. Rubber duck debugging demonstrates the practical power of LLMs.

We don't need to build our own Retrieval Augmented Generation (RAG) model for the latest languages, frameworks, or APIs we use in order to get the benefits of assertive prompt engineering. We can use large language models (LLMs) in their current state, limitations included, as virtual rubber ducks for debugging.

Even with the temperature set to zero and strong hallucinations in the output, your interaction with an LLM can unlock eureka moments... bliss.